Interactive Simulations of Biohybrid Systems

- 1Human-Computer Interaction, University of Würzburg, Würzburg, Germany

- 2Organic Computing, University of Augsburg, Augsburg, Germany

In this article, we present approaches to interactive simulations of biohybrid systems. These simulations comprise two major computational components: (1) agent-based developmental models that retrace organismal growth and the unfolding of technical scaffoldings and (2) interfaces to explore these models interactively. Simulations of biohybrid systems allow us to fast-forward and experience their evolution over time based on our design decisions involving the choice, configuration, and initial states of the deployed biological and robotic actors as well as their interplay with the environment. We briefly introduce the concept of swarm grammars, an agent-based extension of L-systems for retracing growth processes and structural artifacts. Next, we review an early augmented reality prototype for designing and projecting biohybrid system simulations into real space. In addition to models that retrace plant behaviors, we specify swarm grammar agents to braid structures in a self-organizing manner. Based on this model, both robotic and plant-driven braiding processes can be experienced and explored in virtual worlds. We present a corresponding user interface for use in virtual reality. As we present interactive models concerning rather diverse description levels, we only ensured their principal capacity for interaction but did not consider efficiency analyses beyond prototypic operation. We conclude this article with an outlook on future work on melding reality and virtuality to drive the design and deployment of biohybrid systems.

Biohybrid systems, i.e., the cross-fertilization of robotic entities and plants, take robotic control and interconnected technologies a significant step beyond the design, planning, manufacture, and supply of complex products. Instead of relying on pre-defined blueprints and manufacturing processes that fulfill certain target specifications, biohybrid systems consider, and even make use of, the variability of living organisms. By promoting and guiding the growth and development of plants, the characteristics exhibited throughout their life cycles become part of the system, from esthetic greenery over load-bearing and energy-saving structural elements to the potential supply of nourishment. At the same time, biohybrid systems are feedback-controlled systems, which means that (1) deviations of the individual plant, e.g., in terms of its health or developmental state, (2) unexpected environmental trends, e.g., in terms of climatic conditions or regarding changes in the built environment, and (3) changes in the target specifications can be compensated for. These traits of robustness, adaptivity, and flexibility, in combination with a potential longevity that may easily outlast a human lifetime, may very well render biohybrid systems a key technology in shaping the evolution of mankind. However, comprehensive basic research has to be conducted in order to arrive at a state mature enough to deploy and benefit from a biohybrid system outside of laboratory conditions.

While it may seem obvious that the primary concerns of a corresponding research agenda are the properties and interactions of plants and robots, the resulting systems also pose an intriguing challenge in terms of user interaction. Their expected non-linearity as well as their strong dependency on concrete environmental location and condition demand a correspondingly comprehensive, flexible, and location-dependent planning process: the designer should be empowered to travel to a prospective deployment site for a biohybrid system, investigate its various possible configurations in the given context, and probe its potential impact over time. This capability can only be realized if several technological requirements are met. It implies that, given a specific parameter set, we need plausible predictions about the development of the system. Changes to these parameters need to be considered as well. In addition, the corresponding dynamic simulation has to leave a small computational footprint so that the user can rely on lightweight mobile computing devices to evaluate designs at the very locations where the biohybrid systems should be deployed. Furthermore, these simulations have to run at real-time speed so that various impact factors that the designer foresees can be considered in the scope of one or many simulations. Serving the simulated development of a biohybrid system in situ not only challenges the systems’ engineers in terms of computational efficiency; the in situ projection also needs to be supported by an accessible user interface which considers the intricacies of biohybrid systems as well as the complexities of their physical environment.

In this article, we present our ongoing efforts toward such technologies at the intersection of biohybrid systems and their human users. Our goal is to simulate biohybrid systems in real time and to make these simulations interactive. Prototyping, planning, and deployment of biohybrid system configurations represent the immediate use cases for the corresponding real-time interactive simulations. Accordingly, our approach considers real-time-capable simulation models of plant growth and dynamics as well as robotic interactions. Generative models such as L-systems and generic agent-based modeling approaches paved the way for the models we devised for interactive biohybrid simulations. We briefly survey these preceding works in Section 1. Next, we introduce our interactive modeling approach for biohybrid systems in Section 2. More specifically, we adjust a swarm grammar representation to incorporate various developmental behaviors of plants such as lignification, phototropism, and shade avoidance. We also utilize the agent-based swarm grammar approach to develop futuristic models of robotic units braiding scaffolding structures, as currently worked on in the biohybrids research community. In Section 3, we present an augmented reality (AR) prototype for the design of biohybrid systems. The specific challenges introduced by the augmented reality setting, such as remodeling real-world lighting conditions or limited input capabilities, are overcome in a virtual reality (VR) prototype presented in Section 4. Another advantage of VR is that the development and effect of a biohybrid system can be experienced in the context of arbitrary (virtual) environments, no matter how remote or futuristic they may be. For now, it also allows us to focus on the design of concrete user interfaces for selecting and configuring biohybrid components and for navigating through the simulation process. We conclude this article with a summary and an outlook on future work in this field.

1. Generative Models

At the core of the virtual or augmented, projected biohybrid system prototypes that we present in this article lie various generative models that drive the development and growth of robotic and plant-based structures. In this section, we summarize preceding works in the field of generative modeling. First, we briefly explain the general idea of procedural generation of content. Often, it is used in the context of creating computer graphics assets, for instance for three-dimensional terrains or detail-heavy textures. Next, we introduce L-systems, a generative modeling approach that translates basic biological proliferation into a formal representation which, in turn, can be geometrically interpreted and visualized (Prusinkiewicz and Hanan, 2013). L-systems define the state-of-the-art in generating three-dimensional assets of plants but are also widely applied in other contexts—from breeding novel hardware designs (Tyrrell and Trefzer, 2015) to the encoding of artificial neural networks (de Campos et al., 2015). L-systems have been extended in various ways to support dependencies to the environment or specific behaviors in development, such as gravitropism or crawling. Swarm grammars represent the most open and flexible extension of L-systems as the plants’ tips as well as the grown stem segments, leaves, etc. are considered agents that can react to their environment in arbitrary ways, also in real time. Therefore, we chose swarm grammars as the basic representation for our interactive biohybrid experiments.

1.1. Procedural Content Generation

Interactive systems such as the ones that we present in this article are, from a technological perspective, rather close to video and computer games. They have to calculate and render models at high speeds to ensure that there are no lags for the user’s camera view(s). They also have to provide means for interaction and provide adequate responses both regarding the behaviors of the simulated system and its visualization. Overall, the requirements for procedural content generation (PCG) approaches are very similar in games and in our application scenario. Shaker et al. (2016) detail PCG approaches that are frequently used in the context of computer games. They understand PCG as algorithmically creating contents, whereas user input only plays a minor role, if it is involved at all. In particular, one distinguishes between utilizing PCG to generate contents while a game is played or a simulation is run, or beforehand (online vs. offline). The PCG content is considered necessary if it plays an instrumental role in the interactive scenario. Otherwise, if it is only meant as eye candy, it is optional. Depending on the information that is fed into the PCG machinery, the approach can be classified as either driven by random seeds or by parameter vectors that may determine one or the other parameter range or provide constant values. The way this data informs the PCG algorithm(s) may be deterministic, i.e., it reliably produces identical results at each run, or stochastic, in which case its output varies accordingly. Furthermore, a PCG approach may be constructive, which means that compliance with a certain goal or satisfaction of a set of given constraints is ensured while an artifact is created. The alternative would be to generate an artifact first and test whether it fulfills the required criteria afterward, which is referred to as generate-and-test.
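The difference between constructive and generate-and-test generation, and the role of a random seed, can be illustrated with a minimal Python sketch. The height-profile task, the function names, and the constraint (neighboring heights differ by at most `max_step`) are assumptions made for this illustration only; they are not taken from Shaker et al. (2016).

```python
import random

def is_valid(heights, max_step):
    """Constraint: neighboring heights differ by at most max_step."""
    return all(abs(b - a) <= max_step for a, b in zip(heights, heights[1:]))

def constructive_heights(rng, n, max_step):
    """Constructive: the constraint is respected while the artifact is built."""
    heights = [0]
    for _ in range(n - 1):
        heights.append(heights[-1] + rng.randint(-max_step, max_step))
    return heights

def generate_and_test_heights(rng, n, max_step, max_tries=10_000):
    """Generate-and-test: sample freely, keep the first candidate that passes."""
    for _ in range(max_tries):
        candidate = [0] + [rng.randint(-3, 3) for _ in range(n - 1)]
        if is_valid(candidate, max_step):
            return candidate
    raise RuntimeError("no valid candidate found")

# The same seed reproduces the same artifact, so the stochastic generator
# behaves deterministically from the caller's point of view.
profile_a = constructive_heights(random.Random(42), 8, max_step=2)
profile_b = constructive_heights(random.Random(42), 8, max_step=2)
tested = generate_and_test_heights(random.Random(7), 6, max_step=2)
```

The constructive variant never produces an invalid artifact, whereas the generate-and-test variant may discard many candidates before one passes, which matters when content has to be generated at interactive speeds.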

1.2. Functions, Reactions, Behaviors

Depending on the overarching goals, some approaches lend themselves better to generating contents than others. For instance, there are several methods for generating artificial landscape terrains, from smooth to rugged, even to sharp surfaces. Midpoint displacement, or diamond-square, for instance, is a simple scheme that recursively divides line segments to determine values on a height map dependent on neighboring points (Rankin, 2015). A considerable improvement can be achieved by additionally considering external forces such as erosion (Cristea and Liarokapis, 2015).
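As an illustration, one-dimensional midpoint displacement can be sketched in a few lines of Python. The function name, the `roughness` factor that shrinks the random offset at each level, and the iterative (rather than recursive) formulation are choices made for this sketch and are not prescribed by the cited works.

```python
import random

def midpoint_displacement(left, right, levels, roughness=0.5, seed=0):
    """Refine a height profile by inserting displaced midpoints.

    Each level halves every segment; the midpoint height is the mean of its
    two neighbors plus a random offset whose amplitude shrinks per level.
    """
    rng = random.Random(seed)
    heights = [left, right]
    amplitude = 1.0
    for _ in range(levels):
        refined = []
        for a, b in zip(heights, heights[1:]):
            midpoint = (a + b) / 2 + rng.uniform(-amplitude, amplitude)
            refined.extend([a, midpoint])
        refined.append(heights[-1])
        heights = refined
        amplitude *= roughness
    return heights

profile = midpoint_displacement(0.0, 1.0, levels=4)
```

After `levels` refinements the profile holds 2^levels + 1 samples. A smaller `roughness` yields smoother terrain, a value close to 1 a more rugged one.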

While physicality plays an enormous role, complex structures in nature often emerge from organismal behaviors. These may be simple, repetitive, and reactive, such as the habitual secretion of calcium deposits, which results in the formation of molluscan shells or the skeletons of corals (Thompson, 2008). As soon as cellular proliferation and differentiation are considered, complex branching structures can emerge. L-systems are a computational representation that effectively abstracts from the complexity of organismal growth yet is capable of retracing the development of complex forms (Lindenmayer, 1971; Prusinkiewicz and Lindenmayer, 1996).

1.3. L-Systems

In L-systems, a symbol or character represents a biological cell at a specific state or of a specific type. Production rules, similar to those associated with formal grammars, determine which other state/type a cell will transition into in the next iteration. As a cell may also reproduce, a single cell may yield two or more new cells. Considering that transitions may not be fully deterministic, probabilities could be associated with such production rules. Some transitions might also be triggered by a cell’s context. According context-sensitive rules require two or more cells to be present, possibly in a specific state, to trigger a transition, etc. There are a large number of variations of production rules and their respective impact on the emergent processes and artifacts. No matter which specific L-system one implements, they all have in common that based on an initial axiom and a set of production rules a string is iteratively generated by applying all fitting rules in parallel at each step of the algorithm.
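The parallel rewriting step shared by all L-system variants can be sketched as follows. This is a minimal deterministic, context-free example; the function name `rewrite` and the dictionary encoding of the production rules are assumptions made for this illustration.

```python
def rewrite(axiom, rules, steps):
    """Apply all fitting production rules in parallel, `steps` times.

    Symbols without a matching rule are copied unchanged.
    """
    word = axiom
    for _ in range(steps):
        word = "".join(rules.get(symbol, symbol) for symbol in word)
    return word

# A cell "A" that reproduces into "A" and "B" at every iteration,
# while "B" cells turn back into "A" cells.
growth = rewrite("A", {"A": "AB", "B": "A"}, steps=4)
print(growth)  # ABAABABA
```

Because the new word is assembled from the old one in a single pass, every symbol is substituted based on the state of the previous iteration, which is what distinguishes the parallel rewriting of L-systems from the sequential derivations of formal grammars.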

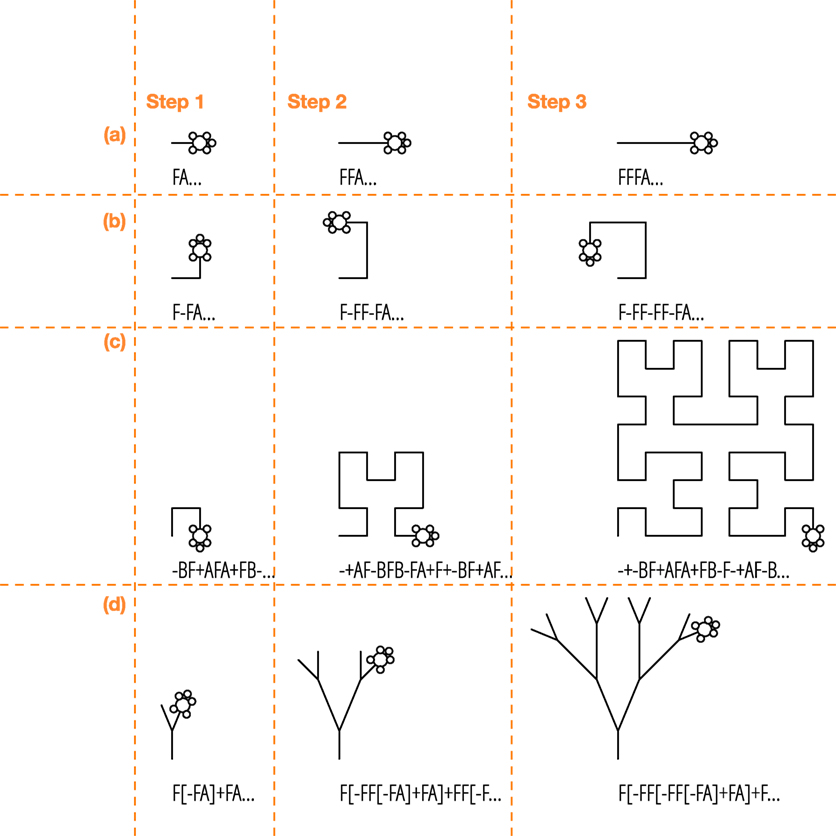

At each iteration, the generated string can be interpreted graphically: the so-called “turtle interpretation” algorithm steps through the symbols of the string and considers them instructions for a turtle to turn by a certain angle or to walk a certain distance. Figure 1 shows the first three steps of four different L-systems illustrated by means of the turtle interpretation. The production rules of L-systems substitute any symbols in accordance with the respective rules. For instance, an initial “A” might have been replaced by “AB” at the next step. For the turtle interpretation, the set of symbols may, for instance, include F for “forward,” − for “turn left,” + for “turn right,” [ for “remember position” and ] for “resume last position.” In Figure 1A, a rather simple rule set repeatedly replaces the initial symbol, or axiom, A with FA, whereas A does not carry a graphical meaning. Next, in Figure 1B, the orientation of the turtle is changed by introducing the turn symbol −. The L-system in Figure 1C is simply a bit more involved than the one in Figure 1B, whereas in Figure 1D, the bracketing concept has been introduced, which results in corresponding branching structures.

Figure 1. The first three production steps of four different L-systems (A–D) are illustrated by means of the turtle interpretation.
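The turtle interpretation itself is a small state machine. The following Python sketch interprets the symbols listed above in two dimensions and returns the drawn line segments. The step length, the turning angle of 90 degrees, and the initial upward heading are assumptions of this sketch and do not reproduce the exact settings used for Figure 1.

```python
import math

def turtle_segments(word, step=1.0, angle_deg=90.0):
    """Interpret an L-system word and return the drawn line segments."""
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin, facing upwards
    stack, segments = [], []
    for symbol in word:
        if symbol == "F":            # walk forward and draw
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif symbol == "-":          # turn left (counter-clockwise)
            heading += angle_deg
        elif symbol == "+":          # turn right (clockwise)
            heading -= angle_deg
        elif symbol == "[":          # remember position and heading
            stack.append((x, y, heading))
        elif symbol == "]":          # resume last remembered state
            x, y, heading = stack.pop()
        # any other symbol (e.g., "A") carries no graphical meaning
    return segments

branch = turtle_segments("F[-F]FA")
```

The bracket symbols are what turn a single polyline into a branching structure: the word `F[-F]FA` draws a stem segment, a side branch to the left, and then continues the stem from the remembered position.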

0L, 1L, and 2L-systems are the basic classes of L-systems discussed in light of Chomsky’s hierarchy of formal languages. 0L-systems are context-free and do not consider interdependencies between individual cells, whereas the other classes offer production rules that consider the substitution of individual cells in the context of one or two neighboring cells, respectively. As an example, the simplest, context-free L-system has rules of the form $p \xrightarrow{\theta} s$, whereas $p \in \Omega$ is a symbol of an alphabet $\Omega$, and $s \in \Omega^*$ represents a word over $\Omega$ or an empty symbol. With probability $\theta$, $p$ is substituted by $s$. There is a multitude of extensions to L-systems, including parameterized L-systems, which introduce scalars into the otherwise symbolic rules. These values can, for instance, be used to encode continuous changes of organismal development. Parameterization of the L-system rules also allows one to introduce constraints that link developmental processes or let them interact with the environment.
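A probabilistic, context-free rewriting step of this kind can be sketched as follows. The encoding of the rules as a mapping from a symbol to a list of (probability, successor) pairs is an assumption of this sketch; if no rule fires for a symbol, the symbol is kept unchanged.

```python
import random

def stochastic_rewrite(word, rules, rng):
    """One parallel rewriting step of a probabilistic 0L-system.

    `rules` maps a symbol p to a list of (theta, s) pairs; p is substituted
    by s with probability theta and kept unchanged otherwise.
    """
    result = []
    for p in word:
        replacement = p
        draw = rng.random()          # uniform in [0, 1)
        cumulative = 0.0
        for theta, s in rules.get(p, []):
            cumulative += theta
            if draw < cumulative:
                replacement = s
                break
        result.append(replacement)
    return "".join(result)

rng = random.Random(1)
word = "B"
for _ in range(200):
    # B reproduces with a probability of 1% per step
    word = stochastic_rewrite(word, {"B": [(0.01, "BB")]}, rng)
```

With a successor of `BB`, the word length can only grow, and the expected number of symbols increases by about one percent per step.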

Graph-based representations are rather expressive, and in the 1970s, corresponding extensions to L-systems were already presented (Culik and Lindenmayer, 1976). These efforts were resumed in the early 2000s to devise relational graph grammars (RGGs), a rather flexible implementation of the L-system idea (Kniemeyer et al., 2004). In RGGs, parametric L-systems are extended with object-oriented, rule-based, procedural features, which allow retracing various forms of L-systems, generating arbitrary cellular topologies, and even modeling other, rather process-oriented representations such as cellular automata or artificial chemistries. The integration of aspects of development and of interaction supports modeling organisms such as plants considering both their structure and their function (Kniemeyer et al., 2006, 2008). L-systems have, of course, already been used in the context of real-time interactive systems as well. In one particular instance, an efficient implementation allows the user to generate strings and interpret them visually fast enough to explore the model space and adjust the concrete instances’ parameters for the growth within interactively selected regions of interest (Onishi et al., 2003). This idea was taken up by Hamon et al. (2012), who made it possible not only to let the L-system grow based on contextual cues such as collisions but also to change the L-system formalism interactively, on-the-fly.

1.4. Agent-Based Approaches

The turtle interpretation of L-systems as illustrated in Figure 1 simulates an agent (the turtle) leaving a trail, thereby creating an artifact. Agents receive sensory information about their environment, process the information, and choose actions in accordance with their agenda. Such an agent-based perspective could, of course, drive the actual construction algorithm. Such approaches have been proposed, for instance, by Shaker et al. (2016). The authors demonstrate the concept in the context of a digger agent that leaves corridor trails with chambers at random points in a subsurface setting. They distinguish between an uninformed, “blind” agent and one that is more aware of the built environment and only places new chambers that do not overlap with previously existing ones. Figure 2 shows this representative constructive agent-based example. In our implementation, both agent types had a chance of 10% of changing their direction and of 5% of creating a chamber at each step. The chamber dimensions were randomly chosen between 2 and 5 times the size of a single corridor cell.

Figure 2. Illustration of an agent-based constructive approach to procedural content generation. (A) When moving, the agent leaves corridor trails. (B) It changes its direction and creates chambers at random. (C) An uninformed agent creates overlapping chambers (in orange).
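A digger agent with the probabilities stated above can be sketched on an unbounded grid as follows. The grid representation, the anchoring of a chamber at the agent's current cell, and all names are assumptions of this sketch; it does not reproduce the implementation behind Figure 2.

```python
import random

DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def dig(steps, informed, seed=0, p_turn=0.10, p_chamber=0.05):
    """Let a digger agent carve corridors and chambers into a grid.

    An informed agent skips chambers that would overlap existing ones,
    a blind agent places them regardless.
    """
    rng = random.Random(seed)
    x, y = 0, 0
    direction = rng.choice(DIRECTIONS)
    corridor = {(x, y)}
    chambers = []                      # each chamber is a set of grid cells
    for _ in range(steps):
        if rng.random() < p_turn:
            direction = rng.choice(DIRECTIONS)
        x, y = x + direction[0], y + direction[1]
        corridor.add((x, y))
        if rng.random() < p_chamber:
            width, height = rng.randint(2, 5), rng.randint(2, 5)
            cells = {(x + i, y + j) for i in range(width) for j in range(height)}
            occupied = set().union(*chambers)
            if not informed or not (cells & occupied):
                chambers.append(cells)
    return corridor, chambers

corridor, chambers = dig(500, informed=True, seed=1)
```

The only difference between the two agent types is the overlap test before a chamber is placed, which is exactly the piece of awareness about the built environment that the informed agent needs.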

2. Swarm Grammars

In L-systems and other grammatical developmental representations, neighborhood topologies and neighborhood constraints (who informs whom and how?), are mostly embedded in production rule sets. This is different for the agent-based approach, which also explains the need for awareness about the built environment to achieve coordinated constructions (see Figure 2). Overall, the quality of agent-generated artifacts greatly depends on the ingenuity and complexity of their behavioral program and on the simulated environment.

An important advantage over grammatical representations such as L-systems is the simplicity of extending agent-based systems. Sensory information, behavioral logic, or the repertoire of actions can be easily and directly changed. Dependencies on other agents or the environment can be designed relative to the agent itself, and the topology among interaction partners can evolve arbitrarily based on a modeled, possibly dynamic environment and arbitrary preceding multi-modal interactions. The inherent flexibility of agents (due to their threefold design of sensing, processing, and acting) allows the resulting system to be interactive not only with respect to a modeled environment but also with respect to user input that is provided on-the-fly.

Swarm grammars (SGs) bring together the agent-based, interactive and the reproductive, generative perspectives (von Mammen and Jacob, 2009; von Mammen and Edenhofer, 2014). In the 1980s, Reynolds published on the simulation of flocks of virtual birds, or boids (Reynolds, 1987). Each boid is typically represented as a small, stretched tetrahedron or cone to indicate its current orientation. It perceives its neighbors within a limited field of view (often a segment of a sphere), and it accelerates based on its neighbors’ relative positions and velocities. A boid’s tendency to move toward its neighbors’ geometric center and away from individuals that are too close, and to align its velocity with theirs, yields complex flock formations. Boids are very simple, so-called reactive agents that interact merely spatially. Due to their simplicity, they lend themselves well as a primary agent model to be extended by the L-system concept of generative production. As a result, SGs augment boids to leave trails in space and to differentiate and proliferate as instructed by a set of production rules.

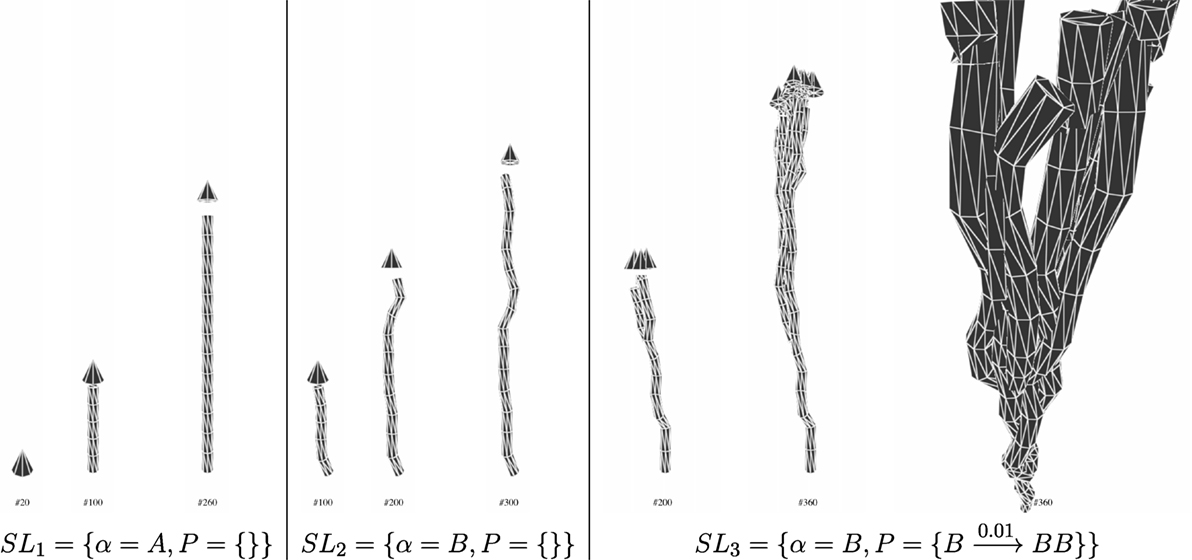

Formally speaking, a swarm grammar SG = {SL, Δ} consists of a rewrite system SL, which comprises an axiom α and a set of production rules P, and a set of agent types or agent specifications Δ. Each specification δ ∈ Δ may determine an arbitrary set of agent features and their respective values. These features may, for instance, include specifics of the agents’ visual or spatial representation or relate to their states or behaviors. The rewrite system SL implements a probabilistic L-system as introduced in Section 1.3, whereas each symbol p of the production rules refers to the alphabet of agent specifications and all actual agent instances in the simulation are configured in accordance with their types. Different from the turtle interpretation of L-systems, tracing the movement of agents leads to structures, and their reproduction yields branching. Figure 3 shows three basic swarm grammar implementations, relying on two agent types A and B. Both of them fly upwards (stepwise positional increment of 0.1 along the y-axis). In addition, B deviates with a 10% chance along the x- and z-axes at each step, with a random increment of at most 0.5 in each direction. SL1 only deploys agent specification A, SL2 and SL3 only deploy specification B, and SL3 also lets its agents reproduce with a probability of 1% at each step.

Figure 3. Three increasingly complex swarm grammars. The counters beneath the screenshots indicate the progression of the respective simulation. The rewrite systems SL express which agent configurations were deployed and whether and how they reproduced. The right-hand side image shows a close-up of the branched structure from SL3.
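The three swarm grammars described above can be retraced with a compact Python sketch. The class and function names are hypothetical, a reproduction event is modeled as the parent continuing while one additional agent of the same type is spawned at its position, and flocking is left out entirely; the step sizes and probabilities are those stated in the text.

```python
import random

class Agent:
    def __init__(self, kind, pos=(0.0, 0.0, 0.0)):
        self.kind = kind
        self.pos = pos
        self.trail = []                # line segments left behind

def step_agent(agent, rng):
    x, y, z = agent.pos
    y += 0.1                           # both agent types fly upwards
    if agent.kind == "B" and rng.random() < 0.10:
        x += rng.uniform(-0.5, 0.5)    # B occasionally deviates sideways
        z += rng.uniform(-0.5, 0.5)
    agent.trail.append((agent.pos, (x, y, z)))
    agent.pos = (x, y, z)

def simulate(axiom, p_reproduce, steps, seed=0):
    """Run a swarm grammar whose only rule duplicates an agent."""
    rng = random.Random(seed)
    agents = [Agent(kind) for kind in axiom]
    for _ in range(steps):
        offspring = []
        for agent in agents:
            step_agent(agent, rng)
            if rng.random() < p_reproduce:
                offspring.append(Agent(agent.kind, agent.pos))
        agents.extend(offspring)
    return agents

sl1 = simulate("A", p_reproduce=0.0, steps=100)    # straight line
sl2 = simulate("B", p_reproduce=0.0, steps=100)    # meandering line
sl3 = simulate("B", p_reproduce=0.01, steps=100)   # branching structure
```

The structure is the union of all trails. Since offspring start at the position of their parent, every reproduction event shows up as a branching point in the artifact.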

To this date, most swarm grammar implementations incorporate the flocking model by Reynolds (1987), where simple local reactive acceleration rules of spatially represented agents drive the flight formation of agent collectives. Hence, next to attributes of the agents’ display, e.g., their shape, scale, and color, the agent specifications δ also consider the parameterization of the agents’ fields of view and the coefficients that determine their accelerations with respect to their perceived neighborhoods. In particular, these coefficients weigh several different acceleration “urges.” These include one that drives an agent to the geometric center of its peers (cohesion), one that adjusts its orientation and speed toward the average velocity of its peers (alignment), one that avoids peers that are too close (separation) as well as some stochasticity. The field of view that determines the agents’ neighborhood perception is typically realized by a viewing angle and by testing proximity (within a maximal perception distance, potentially triggering uneasy closeness).
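The weighted acceleration urges can be sketched as follows. The default values for the perception distance, the viewing angle, and the separation threshold, as well as the dictionary of weights, are placeholders chosen for this illustration and are not parameters reported for any particular swarm grammar implementation.

```python
import math

def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, k): return tuple(x * k for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def norm(a): return math.sqrt(dot(a, a))
def mean(vs): return tuple(sum(c) / len(vs) for c in zip(*vs))

def neighbors(me, others, max_dist, half_angle_deg):
    """Peers within perception distance and within the viewing cone."""
    pos, vel = me
    visible = []
    for other in others:
        offset = sub(other[0], pos)
        dist = norm(offset)
        if dist == 0.0 or dist > max_dist:
            continue
        speed = norm(vel)
        if speed > 0.0:
            cos_angle = dot(offset, vel) / (dist * speed)
            if cos_angle < math.cos(math.radians(half_angle_deg)):
                continue
        visible.append(other)
    return visible

def acceleration(me, others, w, max_dist=5.0, half_angle_deg=135.0,
                 too_close=1.0, rng=None):
    """Weighted sum of cohesion, alignment, separation, and random urges."""
    pos, vel = me
    acc = (0.0, 0.0, 0.0)
    seen = neighbors(me, others, max_dist, half_angle_deg)
    if seen:
        center = mean([p for p, _ in seen])
        acc = add(acc, scale(sub(center, pos), w["cohesion"]))
        average_velocity = mean([v for _, v in seen])
        acc = add(acc, scale(sub(average_velocity, vel), w["alignment"]))
        for p, _ in seen:
            away = sub(pos, p)
            if norm(away) < too_close:
                acc = add(acc, scale(away, w["separation"]))
    if rng is not None:
        noise = tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
        acc = add(acc, scale(noise, w["random"]))
    return acc

me = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))           # (position, velocity)
ahead = ((2.0, 0.0, 0.0), (1.0, 0.0, 0.0))
only_cohesion = {"cohesion": 1.0, "alignment": 0.0, "separation": 0.0, "random": 0.0}
pull = acceleration(me, [ahead], only_cohesion)    # points toward the peer
```

In a swarm grammar, the weights and the field-of-view parameters would be part of the agent specification δ, so that different agent types flock differently.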

Over the years, swarm grammars have evolved in different directions, with some implementations featuring agents that individually carry the production rules along in order to rewrite them based on local needs or to store and retrieve them alongside the agent’s other data (von Mammen and Edenhofer, 2014). This modeling decision raises the question of which features are unique to swarm grammars in contrast to general multi-agent systems (MAS; see, for instance, Wooldridge, 2009). Clearly, swarm grammars represent a subset of MAS. They can be reduced to MAS with state-changing interactions, type-changing differentiation, and reproduction. Typically, swarm grammars implement spatial interaction and yield structural artifacts. In the context of interactive simulations for planning, configuration, adjustment, and exploration of biohybrid systems, swarm grammars are an apt modeling and simulation approach due to their flexibility in terms of agent specifications, their generative expressiveness, and their capacity to interact with (a) complex virtual environments and (b) the user/operator in real time.

2.1. An Interactive Growth Model

In the context of biohybrid systems, robots influence the growth and movement of plants by exploiting their reactive behaviors and dynamic, environment-dependent states. Precise simulation of the multitude of interaction possibilities and the resulting reactions by plants is not feasible, yet. However, one can model plants and their dynamics at an abstract level which is feasible to calculate at interactive speeds and which yields plausible outcomes of the plants’ evolution. By means of swarm grammars, we can specify arbitrary agent properties, behaviors, and production rules to drive a computational developmental model. Those processes that are observed and described at the level of a plant individual represent an adequate level of abstraction for realizing interactive biohybrid system simulations. As an optimization step, groups of individuals might be subsumed and be calculated as single meta-agents (von Mammen and Steghöfer, 2014), but individual plants are the basic unit of abstraction as their influence on the biohybrid system matters. Therefore, to let interactive swarm grammars retrace biological growth and dynamics more closely, we started incorporating various behavioral processes exhibited by different plants to different degrees. Among the most common behaviors are the growth of a plant, its movement, orientation toward light, avoidance of shadow, bending, and lignification [for a general introduction, see for instance Stern et al. (2003)]. In the following paragraphs, we shed light on our implementations of these behaviors and the underlying, abstract models.

2.1.1. Articulated Plant Body

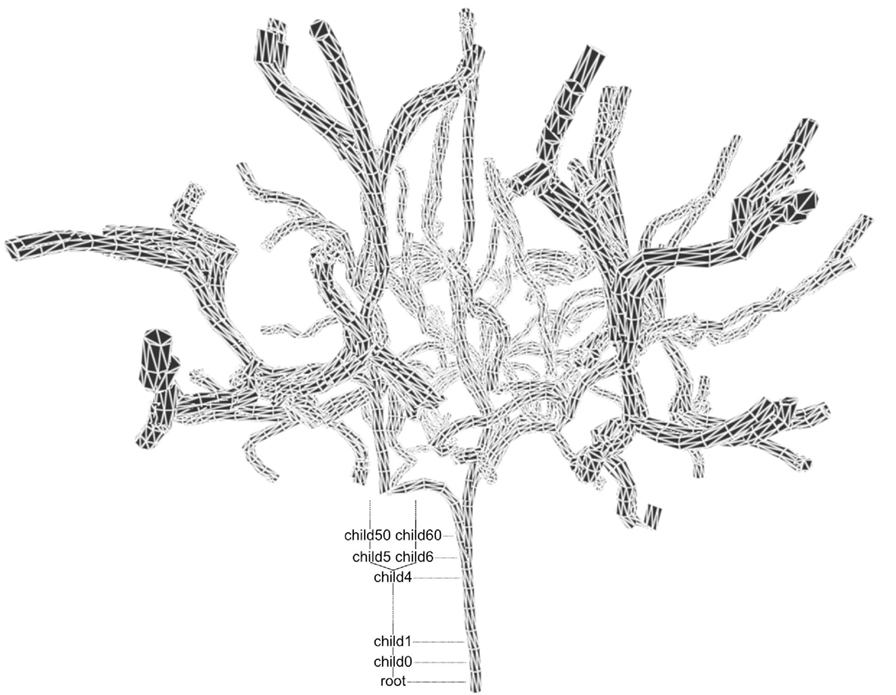

In the original swarm grammar model, there was a clear distinction between the static built artifact and the interacting, building agents (von Mammen, 2006). Later, these components were unified and arbitrary living agents or inanimate building blocks were placed by the simulated agents based on local interaction rules rather than grammatical production rules (von Mammen and Jacob, 2009). In order to retrace the dynamics of plant physiology, we decided to follow the original approach, keep the tip of our abstract plant model separate from the stem’s segments, and assign very clear capabilities to these primary and secondary data objects. In order to support the dynamics arising from interdependencies between the stem’s segments, we introduced a hierarchical data structure to traverse the segments in both directions, also considering branches. This traversal is required to retrace the transport of water, sugar, and other nutrients but also to provide a physical, so-called articulated body structure. Figure 4 shows a swarm grammar after 660 simulation steps. Its rewrite system deploys agent type C, which extends agent B from SL2 and SL3 by means of a separation urge that accelerates away from peers that are closer than 10.0 units. The resulting spread of the branches allows one to retrace the hierarchical data structure annotated in the figure.

Figure 4. A tree structure that unfolds after 660 steps from a swarm grammar whose agent type C implements upwards flight, random movement across the xz-plane, probabilistic branching, and separation. The artifact is captured as an articulated body by means of a hierarchical data structure starting at the root segment. The labels from the root up denote the respective segments’ (referenced by the dotted horizontal lines). The perspective view slightly distorts the appearance of the uniformly scaled segments.
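A hierarchical structure that can be traversed in both directions can be sketched with parent and child references. The class and method names are hypothetical and only illustrate the idea; the actual data objects of the simulation are not described in this level of detail.

```python
class Segment:
    """A stem segment that knows its parent and its children."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []

    def add_child(self, name):
        child = Segment(name, parent=self)
        self.children.append(child)
        return child

    def path_to_root(self):
        """Downward traversal, e.g., for signaling demand toward the root."""
        node, path = self, []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return path

    def descendants(self):
        """Upward traversal across all branches (depth first)."""
        for child in self.children:
            yield child
            yield from child.descendants()

    def total_children(self):
        return sum(1 for _ in self.descendants())

root = Segment("root")
stem = root.add_child("stem-1")
top = stem.add_child("stem-2")       # continuation of the main stem
side = stem.add_child("branch-1")    # a branch forking off at stem-1
```

The upward traversal gives each segment access to everything it has to carry or supply, while the downward traversal lets a tip reach the root.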

2.1.2. Iterative Growth

In our abstract plant model, the tip determines the direction of growth by moving upwards (gravitropism) and in accordance with the lighting situation (phototropism and shade avoidance). Growth is primarily realized by repeatedly adding segments to the plant’s body that are registered as children in the hierarchy. The conceptual translation of biological growth to this additive process follows the original swarm grammar model, which is shown in Figure 3. Secondarily, the segments grow in diameter, increasing the transport throughput, which is needed to supply new growth at the tip(s) of the plant. Water is transported up the xylem vessels to the leaves and, together with sugar produced in the leaves, travels back to the roots through the phloem cell system; see, for instance, Fiscus (1975). In our model, these flows are abstractly captured as the exchange of information between the segments, and the resulting expansion of the plant’s body is reflected in the stem’s diameter but also in the throughput. We assigned a corresponding variable, bandwidth $b_i$, to each segment $i$. In order to enable growth at the tip(s) of the plant, the concrete demand for supply is communicated downstream, and the segments are expanded by an increment $\Delta b$ recursively from the root upwards.
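The interplay of demand and bandwidth can be sketched as follows. How exactly the demand is computed and which segments are expanded is not specified in the text, so this sketch assumes that only segments on the supply path between root and tip are considered and that a segment is expanded by `delta_b` whenever its bandwidth falls short of the demand. All names are hypothetical.

```python
class Segment:
    def __init__(self, parent=None, bandwidth=1.0):
        self.parent = parent
        self.bandwidth = bandwidth     # b_i in the text

def supply_path(tip_segment):
    """Segments between root and tip, ordered from the root upwards."""
    path, node = [], tip_segment
    while node is not None:            # the demand travels down to the root
        path.append(node)
        node = node.parent
    path.reverse()
    return path

def request_growth(tip_segment, demand, delta_b):
    """Expand every under-dimensioned segment on the supply path by delta_b."""
    expanded = 0
    for segment in supply_path(tip_segment):   # root first
        if segment.bandwidth < demand:
            segment.bandwidth += delta_b
            expanded += 1
    return expanded

root = Segment(bandwidth=2.0)
middle = Segment(parent=root, bandwidth=1.0)
top = Segment(parent=middle, bandwidth=1.0)
count = request_growth(top, demand=1.5, delta_b=0.25)
```

In this example, the root already provides sufficient throughput, so only the two upper segments are thickened.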

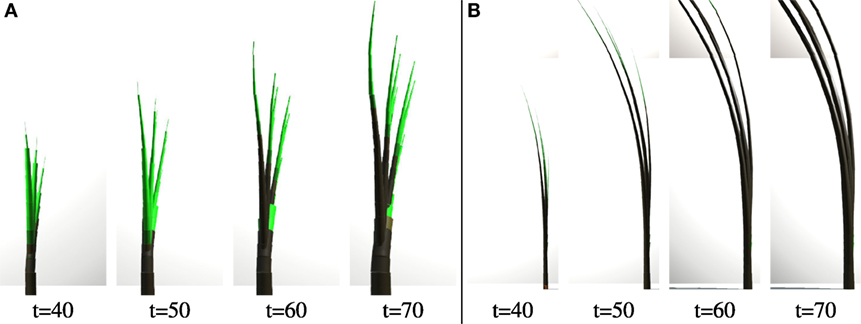

2.1.3. Lignification and Bending

In order to channel the increasing flows, the plant also needs to gain more structural integrity, which is realized by the process of lignification. It means that the stem becomes more rigid and woody to gain more stability based on the deposition of lignin. We modeled this process by introducing a corresponding state variable, stability $s_i$, for all plant segments $i$. The hierarchical links between the segments make it possible to simulate the dynamics of the stem. Plants bend due to the weight of the stem, branches, flowers, and fruits. In addition, environmental interactions, e.g., exposure to wind or collisions with other plants or objects, as well as the plant changing its direction of growth, may all contribute to bending the plant stem. In our model, we only consider the plant’s own weight and the resulting forces. Other forces would need to be applied analogously. In order to achieve plausible bending of the plant in real time, we consider an individual segment’s stability $s_i$, its bandwidth $b_i$, the total number of its children $n_i$, and the position of the tip of the branch. First, using the projection operation onto the XY plane and the unit vector $e_Z$ in Z direction, a bending target direction is calculated using equation (1). Then, incorporating the stiffness and the integration time step, we compute the new orientation of the segment, a quaternion, as the linear quaternion interpolation lerp between its current orientation and the influence by all of its children as summarized in equation (2), whereas the function rotation yields a quaternion oriented toward a given vector. In Figure 5A, the process of lignification is depicted by means of a branching swarm grammar. A simple coloring scheme is directly mapped to the hierarchy to illustrate the age and the degree of lignification of the respective segments. In Figure 5B, the growth target of a swarm grammar is slightly shifted to the left. In this way, the plant bends based on its own weight.

Figure 5. Screenshots of two swarm grammars specifically illustrating lignification and bending at simulation time step t. (A) Lignification and (B) bending.
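The quaternion operations mentioned above can be sketched in plain Python. The functions `rotation` and `lerp` follow the verbal description. In contrast, the target direction in `bend_target` and the blending weight in `bend_step` are assumptions made purely for illustration, since the model's equations are not reproduced here: the target leans toward the horizontal overhang of the branch tip, and the weight grows with the load $n_i$ and shrinks with stability $s_i$ and bandwidth $b_i$.

```python
import math

E_Z = (0.0, 0.0, 1.0)   # up axis, following the Z convention of the text

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def rotation(direction):
    """Unit quaternion (w, x, y, z) that turns E_Z onto `direction`."""
    vx, vy, vz = normalize(direction)
    if vz < -1.0 + 1e-9:                  # opposite to E_Z: half turn about x
        return (0.0, 1.0, 0.0, 0.0)
    return normalize((1.0 + vz, -vy, vx, 0.0))   # (1 + E_Z.v, E_Z x v)

def lerp(q0, q1, t):
    """Normalized linear interpolation between two unit quaternions."""
    if sum(a * b for a, b in zip(q0, q1)) < 0.0:
        q1 = tuple(-c for c in q1)        # take the shorter way around
    return normalize(tuple((1.0 - t) * a + t * b for a, b in zip(q0, q1)))

def rotate(q, v):
    """Rotate vector v by unit quaternion q."""
    w, u = q[0], q[1:]
    uv = cross(u, v)
    uuv = cross(u, uv)
    return tuple(v[i] + 2.0 * (w * uv[i] + uuv[i]) for i in range(3))

def bend_target(base, tip):
    """Assumed target: E_Z tilted by the tip's overhang in the XY plane."""
    return normalize((tip[0] - base[0], tip[1] - base[1], 1.0))

def bend_step(q, base, tip, s_i, b_i, n_i, dt):
    """Blend the segment orientation toward the bending target."""
    weight = min(1.0, dt * n_i / (s_i * b_i))    # assumed stiffness term
    return lerp(q, rotation(bend_target(base, tip)), weight)

upright = rotation(E_Z)
soft = bend_step(upright, (0, 0, 0), (3, 0, 4), s_i=2.0, b_i=1.0, n_i=4, dt=0.1)
stiff = bend_step(upright, (0, 0, 0), (3, 0, 4), s_i=20.0, b_i=1.0, n_i=4, dt=0.1)
```

Under these assumptions, a lignified segment with a high stability value follows the bending target more slowly than a young, soft one, and a tip straight above its segment causes no bending at all.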

2.1.4. Phototropism and Shadow Avoidance

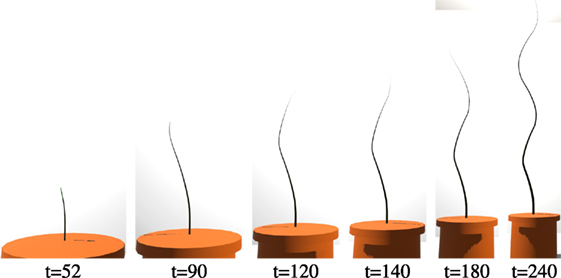

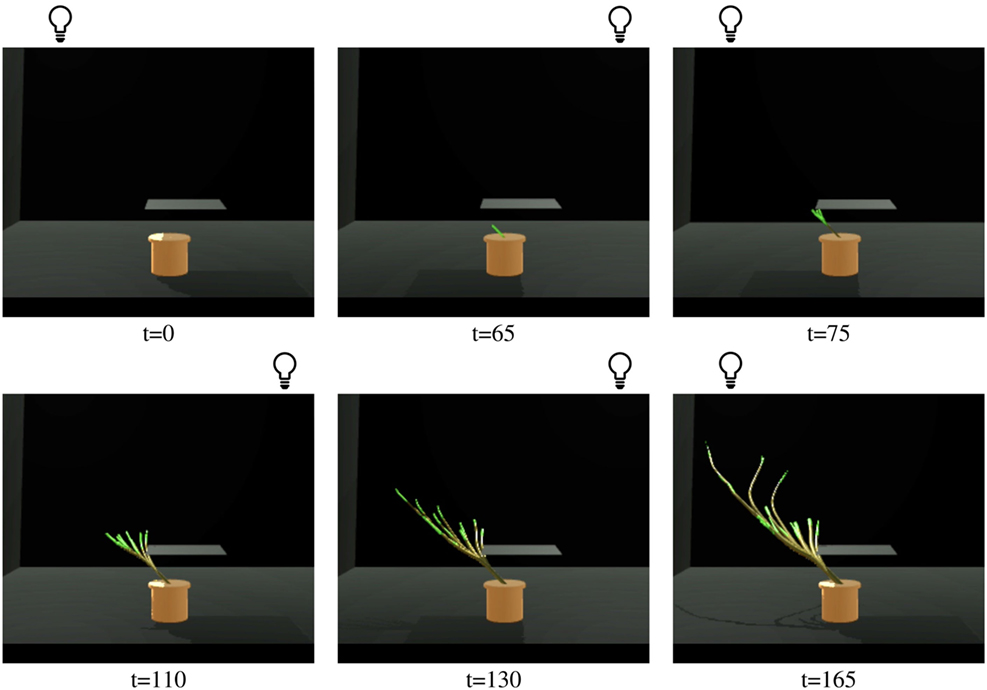

In line with the basic swarm grammar implementation, we expressed branching processes as production rules. Currently, exceeding a given nutritional value triggers the respective rules. Other conditions, for instance relating to the achieved form or considering pruning activities by a gardener, may trigger productions just the same. Phototropism, i.e., the urge to grow toward light, is realized as follows. A set of light sources is iterated and, if the respective light is activated, a raycast, i.e., a projected line between the two objects, reveals whether the light shines on a given segment or not: the raycast must not collide with other objects, and the angle between the light source and the segment must not exceed the angle of radiation. If both conditions hold, the distance vector to the light source down-scaled by some constant $c \in [0, 1]$, the stability factor $s_i$, and the segment’s current position $p_i$ determine the segment’s re-orientation in accordance with Eqn. 3. In this way, the branch’s growth, supported by its stability, is directed toward the light source. Here, the gravitropic response is incorporated implicitly rather than as an additional term that is blended in. Figure 6 shows the visual artifact resulting from the tandem of lignification and phototropic growth. The raycast may also reveal that the segment is not lit by a given light source, similar to determining an object’s shading based on shadow volumes, as elaborated, for instance, by Wyman et al. (2016). In this case, the plant’s gravitropic response is overwritten by a deflection that reduces upwards growth by 75%. The result can be seen in Figure 7: at t = 0, the plant picks up a ray from the top-left light source. A few steps later, the lights are toggled, and the sideways growth is reinforced. Once the shadow-casting plate is overcome, at around t = 100, gravitropism and phototropism boost the development of the different branches of the plant.

Figure 6. Screenshots of a swarm grammar specifically illustrating phototropic growth over time. Two light sources at the top-left and top-right are alternately activated to guide the movement of the tip and thereby influence the shape of the stem.
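
The lit test and the phototropic pull described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used for the figures: the names `Light`, `is_lit`, and `phototropic_offset` are hypothetical, the raycast is abstracted as a caller-supplied `occluded` callback, the angle of radiation is read as a half-angle of the light cone, and the weighting `c * (1 - s_i)` is only a placeholder for Eqn. 3, whose exact form is not restated here.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Light:
    position: Vec3
    axis: Vec3         # direction the spot points in (need not be unit length)
    half_angle: float  # assumed reading of the "angle of radiation", in radians
    active: bool = True


def is_lit(p_i: Vec3, light: Light,
           occluded: Callable[[Vec3, Vec3], bool]) -> bool:
    """A segment is lit if the light is on, the segment lies inside the
    light cone, and the (hypothetical) raycast callback reports no collision."""
    if not light.active:
        return False
    to_segment = sub(p_i, light.position)
    dist = math.sqrt(dot(to_segment, to_segment))
    axis_len = math.sqrt(dot(light.axis, light.axis))
    if dist == 0.0 or axis_len == 0.0:
        return False
    cos_angle = dot(to_segment, light.axis) / (dist * axis_len)
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))
    return angle <= light.half_angle and not occluded(light.position, p_i)


def phototropic_offset(p_i: Vec3, s_i: float, c: float,
                       lights: Iterable[Light],
                       occluded: Callable[[Vec3, Vec3], bool]) -> Vec3:
    """Sum the down-scaled distance vectors to all lights that reach p_i."""
    total = [0.0, 0.0, 0.0]
    for light in lights:
        if is_lit(p_i, light, occluded):
            to_light = sub(light.position, p_i)
            weight = c * (1.0 - s_i)  # ASSUMPTION: stand-in for Eqn. 3
            for k in range(3):
                total[k] += weight * to_light[k]
    return (total[0], total[1], total[2])
```

In an engine-based setting, `occluded` would typically wrap the physics raycast; here it is left abstract so that the cone test and the weighting can be inspected in isolation.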

Figure 7. Screenshots of a swarm grammar specifically illustrating shadow avoidance. The plate hovering above the plant pot effectively shields the light from the top-right light source (depicted as a sphere). Avoiding the shadow, the plant’s growth is dominated by a sideways movement.
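
The shadow case can be illustrated in a similarly hedged way. The sketch below assumes a y-up coordinate system and a horizontal, axis-aligned rectangular plate; `plate_blocks` and `shadow_avoiding` are hypothetical helper names. Only the 75% reduction of upward growth is taken from the text; whether the resulting direction is renormalized afterwards is left open here.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

UPWARD_REDUCTION = 0.75  # stated in the text: upward growth is reduced by 75%


def plate_blocks(light: Vec3, segment: Vec3, height: float,
                 x_range: Tuple[float, float],
                 z_range: Tuple[float, float]) -> bool:
    """Return True if the straight line from the segment to the light
    crosses a horizontal rectangular plate located at y = height."""
    dy = light[1] - segment[1]
    if dy == 0.0:
        return False  # line runs parallel to the plate
    t = (height - segment[1]) / dy
    if not 0.0 < t < 1.0:
        return False  # plate is not between segment and light
    x = segment[0] + t * (light[0] - segment[0])
    z = segment[2] + t * (light[2] - segment[2])
    return x_range[0] <= x <= x_range[1] and z_range[0] <= z <= z_range[1]


def shadow_avoiding(direction: Vec3) -> Vec3:
    """Damp the upward (positive y) component of a growth direction so that
    sideways growth dominates while the segment is shaded."""
    x, y, z = direction
    if y > 0.0:
        y *= 1.0 - UPWARD_REDUCTION
    return (x, y, z)
```

For a growth direction of (0.6, 0.8, 0.0), the damped direction is (0.6, 0.2, 0.0), i.e., the sideways component now outweighs the upward one.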

2.1.5. Interactivity

Currently, the interactive growth model incorporates several factors of plant behavior, including growth and branching, gravitropism, lignification, phototropism, and shadow avoidance. There are other behavioral aspects that should be included as well, especially in the context of biohybrid applications. One such aspect would be creeping, e.g., to make effective use of any scaffolding machinery. Clearly, the presented model has not been tailored to fit the behaviors and features of a specific biological model plant. We are currently investigating approaches to automatically learn the model parameters from empirical data, as outlined by Wahby et al. (2015).

The advantage of the present model over other approaches lies in its interactivity: owing to its algorithmic simplicity, its incremental growth procedure, and the underlying data model, it runs in real time. As a consequence, the model can be utilized in interactive simulations in which human users can seed plants and place obstacles, light sources, scaffolds, or robots to tend the plants. We deem this a critical aspect of simulations of biohybrid systems due to their inherent complexity: the great number of interacting agents, their ability to self-organize and to reach complex system regimes, and their constant interaction with a potentially dynamic and partially self-referential environment make it mandatory to develop a notion of the impact of a specific system configuration before deployment. In the following section, we present interfaces that can harness interactive simulation models for designing and planning biohybrid systems.

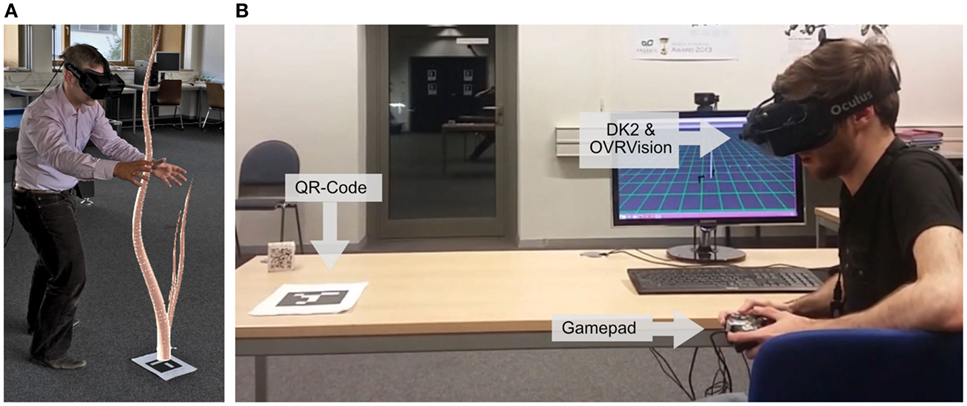

3. Robot Gardens AR

We previously presented an early prototype of an augmented reality system for designing and exploring biohybrid systems (von Mammen et al., 2016a). We refer to the underlying concept as “robot gardens” as we envision the user to be immersed in the system of robots and biological organisms and to be able to tend it like a gardener, not unlike the idea conveyed by von Mammen and Jacob (2007). Planning, planting, and caring for the biohybrid “garden” has a lot in common with an actual garden, as it requires frequent attention over long time spans. The interface we envision for also cultivating robotic parts would visually augment the objects with information about states and control programs (von Mammen et al., 2016). As implied in Figure 8A, hand and finger tracking could make it feasible for the user to interact with virtual organisms and mechanical parts as with physical objects, but also programmatically (Jacob et al., 2008). In this section, we summarize our experiences with a first functional robot gardens prototype for augmented reality. It focuses on the technical feasibility and a first analysis and evaluation of user interaction tasks.

Figure 8. (A) Mock-up of an augmented reality situation where a plant-like structure grows from the floor that the user can interact with. (B) Overview of the robot gardens augmented reality prototype: a stereoscopic camera (OVRVision) extends the functionality of a head-mounted virtual reality display (an Oculus DK2). The camera feed is funneled through to the DK2. Easily detectable QR-markers allow one to place virtual objects in space, at absolute coordinates. A gamepad acts as a simple control interface for the user.

3.1. Overview

Figure 8B captures the hardware setup of our first robot gardens prototype. It enhanced an Oculus DK2 virtual reality head-mounted display by means of a stereoscopic OVRVision USB camera. Information about a QR-code that appears in the video stream is extracted to maintain a point of reference with absolute coordinates. As its location and orientation relative to the user can be inferred from the QR-code image, arbitrary visual data can be overlaid on the video feed to augment reality. The user can issue commands, for instance for placing and orienting robots or seeding plants, in two ways: First, the user’s head orientation is tracked by the DK2 headset, and the center of the view is utilized for selection or positioning tasks in the environment. Second, the user can select and execute individual commands such as pausing/playing or fast-forwarding the simulation or (de-)activating a robot by means of a gamepad.
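
Positioning by the center of the view amounts to intersecting a gaze ray with the ground. The following is a minimal sketch under the assumption that the head pose has already been expressed in the marker's coordinate frame, with the marker lying on the ground plane y = 0; the function name `gaze_target_on_ground` and its parameters are hypothetical and not taken from the prototype.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def gaze_target_on_ground(head_pos: Vec3, forward: Vec3,
                          ground_y: float = 0.0) -> Optional[Vec3]:
    """Intersect the ray through the center of the view with the horizontal
    plane y = ground_y. Returns None if the user does not look at the ground."""
    dy = forward[1]
    if abs(dy) < 1e-9:
        return None  # gaze runs parallel to the ground
    t = (ground_y - head_pos[1]) / dy
    if t <= 0.0:
        return None  # the plane lies behind the viewer
    return (head_pos[0] + t * forward[0],
            ground_y,
            head_pos[2] + t * forward[2])
```

With the head at a height of 1.7 and a gaze direction of (0, -1, 1), the selected ground position lies 1.7 units ahead of the user; a level gaze yields no target.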

3.2. AR Session

Figure 9 shows the simulated content that is projected onto the video feed during simulation. In particular, one sees a pole at the center of the screen. The user has placed four “lamp-bots,” simple robots with a spot-light mounted at the top. They are oriented toward the pole. In Figure 9A, the bottom-left lamp-bot is shaded in red as it has just been placed by the user and is still selected for further configuration, including its orientation. Figure 9B shows how the plant-like structure at the center grows around the pole: as it is attracted by only one lamp-bot at a time, it describes a circular path. In Figure 9C, the user has placed a panel between the bottom-right lamp-bot and the pole, both to shield the plant-like structure from the lamp-bot’s light and to physically prevent it from growing in this direction.

Figure 9. (A) The lamp-bot highlighted in red is currently selected, ready for reconfiguration. (B) The plant-like structure grows around the pole as it is alternately attracted by one of the lamp-bots. (C) A panel has been placed to shield the plant physically from one light source.
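
The effect of attracting the tip by one lamp-bot at a time can be illustrated with a deliberately simplified, two-dimensional sketch. It assumes a round-robin activation schedule with a fixed dwell time and a tip that moves a fixed step toward the active lamp; `active_lamp`, `grow_tip`, and all parameter values are hypothetical and do not reproduce the growth model or the users' actual configurations. Whether the resulting polyline loops around the pole depends on the chosen dwell time and step length.

```python
import math
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]  # top-down view: (x, z)


def active_lamp(step: int, dwell: int, n_lamps: int) -> int:
    """Round-robin schedule: each lamp stays on for `dwell` steps."""
    return (step // dwell) % n_lamps


def grow_tip(tip: Vec2, lamps: Sequence[Vec2], steps: int,
             dwell: int, step_len: float) -> List[Vec2]:
    """Move the tip toward the currently active lamp and record its path."""
    path = [tip]
    for k in range(steps):
        lx, lz = lamps[active_lamp(k, dwell, len(lamps))]
        dx, dz = lx - tip[0], lz - tip[1]
        dist = math.hypot(dx, dz)
        if dist > 1e-9:
            move = min(step_len, dist)  # never overshoot the lamp
            tip = (tip[0] + move * dx / dist, tip[1] + move * dz / dist)
        path.append(tip)
    return path


# Four lamp-bots arranged around a pole at the origin (illustrative values).
lamps = [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (0.0, -2.0)]
path = grow_tip((0.0, 0.0), lamps, steps=3, dwell=5, step_len=0.1)
```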

3.3. Usability

We conducted a short usability study to learn which aspects of our prototype work and which do not. The 12 students (22–25 years, only two with a background in computer science or related fields) were introduced to the interface and then asked to accomplish three tasks of increasing complexity. The first task was merely to place a lamp-bot within a given region. The second one required the user to orient the lamp-bot to face a certain direction. As their third task, they needed to guide a phototropically and gravitropically growing plant-like structure around a pole, utilizing and configuring panels and lamp-bots. The biohybrid configuration depicted in Figure 9 is similar to some of the results created by the testers. We drew the following conclusions from this short study [detailed in von Mammen et al. (2016a)]:

1. Planning and designing biohybrid systems in augmented reality is an obvious approach and easily achievable.

2. The interactions among the biohybrid agents should be visualized, e.g., the light cones of the spot-lights.

3. A tethered hardware setup poses an unwelcome challenge, even under laboratory conditions.

4. In order to provide the means of complex configurations, naturalness of the user interface needs to be increased further: gaze-based selection and positioning worked well but using a gamepad put a great cognitive load on most testers.

5. The interface can be improved by revealing additional information such as occluded lamp-bots (e.g., by rendering the ones above semi-transparently).

6. The visualization can be further improved by mapping the actual lighting conditions onto the virtual, augmented objects.

4. Virtual Realities

Our experiments confirmed that designing biohybrid systems by means of AR represents a viable approach. They also stressed that the shortcomings of tethered hardware solutions render more rigorous testing and development of such a system challenging. We are aware that recent advances in augmented reality hardware have already demonstrated that these teething troubles will soon be overcome, at affordable prices and with reasonable processing power. However, instead of iteratively refining the augmented reality prototype, the goal of exploring the design spaces of biohybrid systems can be achieved faster by means of virtual reality. Therefore, in order to explore the novel spaces that open up based on biohybrid design concepts, we decided to flesh out a corresponding VR approach (Wagner et al., 2017). In particular, architects involved in biohybrid research [see for instance Heinrich et al. (2016)] have been investigating the idea of braided structures as they are lightweight yet strong and structurally flexible, which are important properties when aiming at results from plant-robot societies. Accordingly, for a simulation-driven virtual reality world, we adapted swarm grammar agents to braid in a self-organized manner based on cues in their local environments. We do not decide whether the plants or the robots will eventually play the role of the braiding agents or how they will be realized technically. Rather, we assume that the designer of the future will have braiding agents available and can deploy them at will. In this section, we briefly introduce this VR system.

4.1. VR Interface

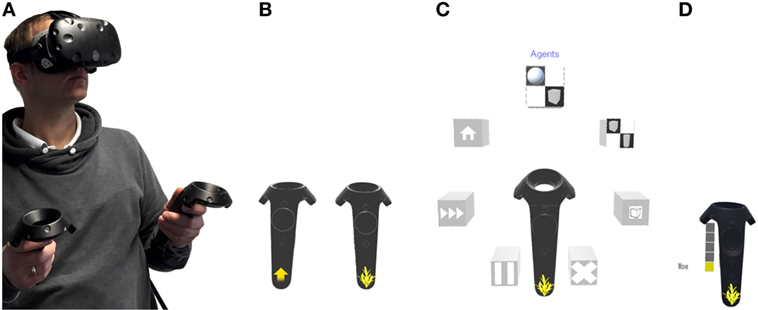

Unlike AR, where the user’s natural environment is augmented by additional information (such as information about the configuration and evolution of biohybrid systems over time), VR immerses the user in a virtual world, where even the surroundings can be of artificial origin. The greater the quality, the more natural the interactions, and the faster the response, the more the VR technology vanishes into the background. We say the user is more immersed and, based on their emotional engagement, can find themselves fully present in VR (Slater and Wilbur, 1997). For the purpose of our experiment, we focused on providing the functionality needed to quickly prototype certain physical, static environments and to place and, in part, direct braiding agents. Figure 10A shows the VR gear, comprising one head-mounted display (HMD) and two 3D controllers. The HMD is tethered to a powerful desktop computer. The positional tracking information of the devices is calculated based on two additional lighthouse boxes that shine from two diagonally opposite corners of an area of approximately 3 m × 3 m. In VR, the two controllers are displayed exactly where the user would expect them, and they are augmented with two small icons to distinguish their input functionality (Figure 10B). The arrow icon indicates that this controller is used to move the user through the virtual space: when the user presses the flat, round, touch-sensitive button on the controller, an arc protrudes from the controller and intersects with the ground. Accordingly, depending on the controller’s direction and pitch, a close-by location on the ground is selected. When the user releases the button, the view is moved to this new location instantaneously. This approach to navigation in VR has been widely adopted, as any animation of movement that does not correspond to one’s actual acceleration may contribute to motion sickness (von Mammen et al., 2016b).

Options to control the simulation as well as any manipulations of the environment are made available by the second controller, marked with a plant-like yellow icon. If the user presses the small round button above the big round touch-sensitive field, a radial menu as seen in Figure 10C opens up around the controller. The top-centered menu item is selected if the user presses the trigger button at the back of the 3D controller with the index finger. The radial menu is rotated to the right or left by the corresponding inputs on the controller’s touch-sensitive field. In order to quickly set up and assay the environment, objects can also be scaled or moved by means of the touch-sensitive field once the corresponding transform mode is activated, as shown in Figure 10D.
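
The arc-based selection of a teleport target described above can be approximated by a ballistic curve. The sketch below is an assumption-laden illustration rather than the prototype's code: it treats the arc as a projectile launched from the controller along its pointing direction, takes the ground as the plane y = 0, and uses hypothetical names (`teleport_target`) and default values for launch speed and gravity.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def teleport_target(origin: Vec3, direction: Vec3,
                    speed: float = 5.0,
                    gravity: float = 9.81) -> Optional[Vec3]:
    """Return the point where a ballistic arc launched from `origin` along
    `direction` meets the ground plane y = 0, or None if there is none."""
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0.0 or origin[1] < 0.0:
        return None
    dx, dy, dz = (d / length for d in direction)
    vy = speed * dy
    # Solve origin_y + vy * t - 0.5 * gravity * t^2 = 0 for the positive root.
    t = (vy + math.sqrt(vy * vy + 2.0 * gravity * origin[1])) / gravity
    return (origin[0] + speed * dx * t, 0.0, origin[2] + speed * dz * t)
```

Pitching the controller upward lengthens the time of flight and thus moves the target farther away, which matches the described dependence on the controller's direction and pitch.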

Figure 10. (A) The virtual reality gear consists of a head-mounted display and two handheld 3D controllers. (B) Each controller is assigned a disjoint subset of control features (left: locomotion, right: construction and control of the virtual world and agents). (C) A radial menu offers multiple interaction modes.
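
The radial menu logic, i.e., rotating the items and selecting whichever item is at the top, can be captured by a small ring buffer. The class `RadialMenu`, the item labels, and the mapping of rotation directions are hypothetical and serve only to illustrate the interaction pattern.

```python
from collections import deque
from typing import Iterable


class RadialMenu:
    """Ring of menu items; the item at index 0 is the top-centered one."""

    def __init__(self, items: Iterable[str]) -> None:
        self._items = deque(items)

    @property
    def top(self) -> str:
        return self._items[0]

    def rotate_right(self) -> None:
        # The last item moves into the top-centered position.
        self._items.rotate(1)

    def rotate_left(self) -> None:
        # The second item moves into the top-centered position.
        self._items.rotate(-1)

    def select(self) -> str:
        """Called when the trigger is pressed: returns the top-centered item."""
        return self.top


menu = RadialMenu(["seed plant", "place plate", "braiding volume", "play/pause"])
menu.rotate_right()
chosen = menu.select()
```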

4.2. Braiding Agents

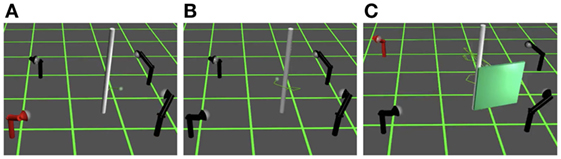

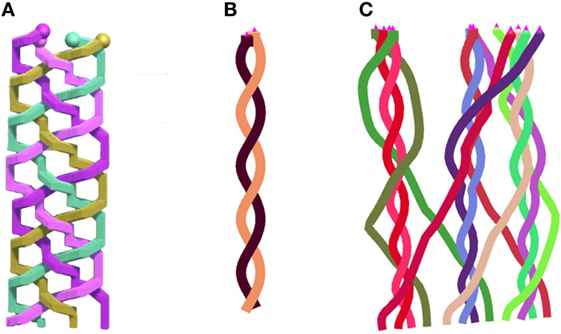

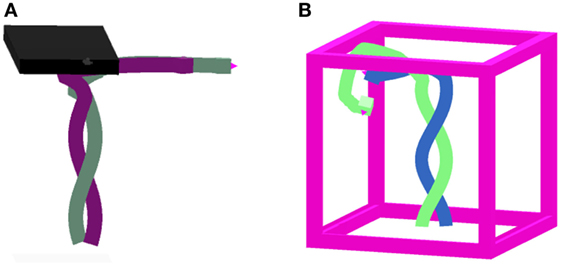

Braids consist of multiple threads that are pairwise interwoven. In order to create such a structure, a simple algorithm can be formulated in which a specific thread is identified based on its position relative to its neighbor threads and folded to cross them. Figure 11A shows our first approach to retracing such a centralized algorithm. In an open, biohybrid system, the agents, whether robots or biological organisms, need to act autonomously and in a self-organized fashion. Therefore, we created a corresponding behavioral description that can be performed by each agent individually and that globally results in a braided structure. In Figure 11B, the latter, self-organized approach is shown in the context of two braiding swarm grammar agents: if a neighbor is close enough, they start rotating around the axis between the two. Braids across several threads can, for instance, be achieved by (1) expanding the agents’ field of view to increase the probability of seeing multiple peers and (2) letting the agent that has close-by neighbors but is otherwise furthest away move toward the opposite end of the flock while the others continue as they were. As this multi-agent braid takes considerable effort in terms of velocity regulation and parameter calibration, as seen in Figure 11C, we relied on a two-agent braiding function for our early experiments. In order to guide the braid agents, the user can place objects such as the plate in Figure 12A in VR space, which cause the agents to deflect. Alternatively, as shown in Figure 12B, so-called braiding volumes [in analogy to “breeding volumes” used by von Mammen and Jacob (2007)] can be deployed to enclose agents within specific spaces. These braiding volumes can be placed seamlessly, as seen in the next paragraphs, to provide arbitrary target spaces.
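
The centralized variant can be illustrated by the familiar three-strand braid, in which the outer thread on alternating sides is folded over the middle one. This is a generic textbook scheme and an assumption on our part, not necessarily the exact procedure behind Figure 11A; the function name `three_strand_braid` is hypothetical.

```python
from typing import List, Sequence, Tuple


def three_strand_braid(threads: Sequence[str],
                       crossings: int) -> List[Tuple[str, ...]]:
    """Track the left-to-right order of three threads while alternately
    folding the left and the right outer thread over the middle one."""
    if len(threads) != 3:
        raise ValueError("this sketch handles exactly three threads")
    order = list(threads)
    history = [tuple(order)]
    for k in range(crossings):
        i = 0 if k % 2 == 0 else 1  # left pair on even steps, right pair on odd
        order[i], order[i + 1] = order[i + 1], order[i]
        history.append(tuple(order))
    return history


history = three_strand_braid(["A", "B", "C"], crossings=6)
```

After six crossings the threads return to their initial order, so the pattern repeats with a period of six steps.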

Figure 11. (A) Three threads are braided from the bottom upwards. Similar to a loom, one program orchestrates the exact paths (von Mammen et al., 2016a). (B) Two swarm grammar agents (pink pyramids at the top) see each other and make sure to cross each other’s path in order to yield braided traces. (C) Twelve swarm grammar agents braiding together. As the parameter values are not properly adjusted yet, wider streaks seemingly occur at random.
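
For the self-organized two-agent case, one possible reading is that both agents keep rising while rotating about a vertical axis through their midpoint as soon as they perceive each other. The sketch below encodes exactly this assumption; the function `braid_step`, the perception radius, the turning angle, and the rise per step are hypothetical values chosen for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def braid_step(a: Vec3, b: Vec3, perception: float = 3.0,
               angle: float = math.pi / 8,
               rise: float = 0.1) -> Tuple[Vec3, Vec3]:
    """Advance two agents by one step. If they perceive each other, both
    rotate about the vertical axis through their midpoint while rising;
    otherwise they simply rise."""
    if math.dist(a, b) > perception:
        return (a[0], a[1] + rise, a[2]), (b[0], b[1] + rise, b[2])
    cx, cz = (a[0] + b[0]) / 2.0, (a[2] + b[2]) / 2.0
    cos_a, sin_a = math.cos(angle), math.sin(angle)

    def turn(p: Vec3) -> Vec3:
        dx, dz = p[0] - cx, p[2] - cz
        return (cx + dx * cos_a - dz * sin_a,
                p[1] + rise,
                cz + dx * sin_a + dz * cos_a)

    return turn(a), turn(b)
```

Repeated application lets the two traces wind around each other like a twisted pair; after a half turn the agents have swapped sides, which corresponds to one crossing.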

Figure 12. (A) The SG braid agents react to their physical environment and deflect from a plate. (B) Two SG braid agents are trapped inside a braiding volume which can be used to guide their evolution (Wagner et al., 2017).
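
Enclosing agents in a braiding volume can be sketched as a containment test combined with a reflection at the boundary. The example assumes an axis-aligned box and a simple velocity flip; `BraidingVolume` and `confined_step` are hypothetical names, and the actual volumes and deflection behavior in the VR system may differ.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class BraidingVolume:
    lo: Vec3  # minimum corner of the axis-aligned box
    hi: Vec3  # maximum corner of the axis-aligned box

    def contains(self, p: Vec3) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))


def confined_step(pos: Vec3, vel: Vec3,
                  volume: BraidingVolume) -> Tuple[Vec3, Vec3]:
    """Advance an agent by one step. Any velocity component that would carry
    the agent out of the volume is flipped; the result is clamped to the box
    as a safeguard against very large steps."""
    new_vel = list(vel)
    for axis in range(3):
        nxt = pos[axis] + vel[axis]
        if nxt < volume.lo[axis] or nxt > volume.hi[axis]:
            new_vel[axis] = -vel[axis]
    new_pos = tuple(
        min(max(p + v, l), h)
        for p, v, l, h in zip(pos, new_vel, volume.lo, volume.hi)
    )
    return (new_pos[0], new_pos[1], new_pos[2]), (new_vel[0], new_vel[1], new_vel[2])
```

Placing several such volumes next to each other, with agents handed over from one to the next, would be one way to approximate arbitrary target spaces.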

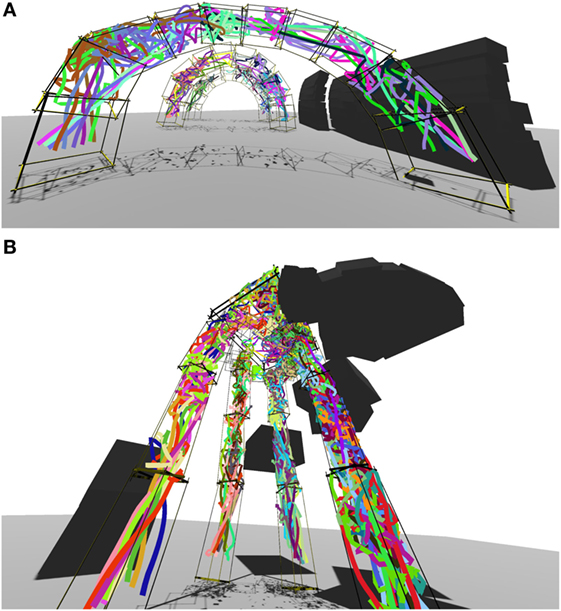

4.3. Braiding Experiments

In a first set of experiments, we asked students with a computer science background as well as architecture students to test the VR braiding prototype and let their creativity roam freely, after a tutorial-based or oral, hands-on introduction to the interface and the simulation mechanics. It took the testers very little time (roughly 2–3 min) to familiarize themselves with the various aspects of the user interface. Therefore, we assume that it offered a very shallow learning curve despite the inherent complexity of the combined task of navigation, transformation, placement, and simulation control. We also learned that both student groups were intrigued by the autonomy of the braiding agents but, due to a lack of explanation, could not fully retrace the individual agents’ behaviors in different situations. Clearly, this is one of the aspects we aim to work on next. Figure 13 shows examples of braided structures that were captured during the experiments. During the subsequent interviews, the architecture students and one architecture professor in particular stressed that they foresaw great potential for biohybrid systems in design and construction and that they are convinced that research toward corresponding simulations and user interfaces is crucial for its realization. Further details are provided by Wagner et al. (2017).

Figure 13. Braiding volumes are arranged as (A) arcs and (B) columns to guide the building swarms of agents (Wagner et al., 2017).

5. Conclusion

Biohybrid systems promise to act as enzymes, accelerating and automating various interaction cycles with nature that had previously been performed by humans. Considering time scales and the spatial distribution of robotic nodes, it is evident that biohybrid systems also have the potential to bring about completely new situations and artifacts. Simple biohybrid systems can be set up rather quickly, whereas their impact might not be evident right away. Accordingly, even for simple use cases, we are convinced that simulations should be consulted to design and refine biohybrid systems. In order for such simulations to be effective and to support the user in an accessible manner, they need to run at real-time speed, and they need to deploy developmental data structures that grow over time as well as models that can be easily influenced by different kinds of interactions.

In this article, we motivated agent-based procedural content generation in the context of biohybrid simulations due to its inherent compatibility with interactive simulations. We revisited swarm grammars as a means to combine developmental processes and agent-based models. In order to use swarm grammars to drive basic plant models in interactive biohybrid simulations, we extended the previously existing models with basic botanical behavioral patterns including gravitropism, phototropism, growth, lignification, and shadow avoidance. In order to realize these model extensions, we established a hierarchical representation of the swarm grammars’ trace segments. This data structure serves as a simple means to regulate the metabolic factors during plant growth, at the same time. We showed how an augmented reality setup can deploy interactive models to inform designers during the planning stage of biohybrid simulations, and finally, we introduced a virtual reality system to explore novel design spaces that will be opening up due to biohybrid systems research and development.

As with all other interactive simulations, the quest for biohybrid systems is multifaceted and, therefore, challenging. Poor user interface designs and technologies can degrade the user experience just as quickly as insufficient models or slow simulation speeds. Therefore, all the aspects presented in this article are tightly interwoven and, at the same time, all deserve more work to support biohybrid systems design and dissemination. In order to accelerate these efforts, integrated iterative research and development cycles should be established to, for instance, seamlessly integrate new mechanical and logical capabilities of robots for biohybrid systems, or to improve the predictability and accuracy of real-time plant models.

Author Contributions

SM supervised and coordinated all the works, DW implemented the VR approach to braiding agents, AK helped in model designs and experimentation, UT was responsible for the design and implementation of plant behaviors of swarm grammar agents.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer PG and handling editor declared their shared affiliation.

References

Cristea, A., and Liarokapis, F. (2015). “Fractal nature – generating realistic terrains for games,” in 7th International Conference on Games and Virtual Worlds for Serious Applications (VS-Games) (Skövde), 1–8.

Culik, K., and Lindenmayer, A. (1976). Parallel graph generating and graph recurrence systems for multicellular development. Int. J. Gen. Syst. 3, 53–66. doi: 10.1080/03081077608934737

de Campos, L. M. L., de Oliveira, R. C. L., and Roisenberg, M. (2015). “Evolving artificial neural networks through l-system and evolutionary computation,” in International Joint Conference on Neural Networks (IJCNN) (Killarney), 1–9.

Fiscus, E. L. (1975). The interaction between osmotic-and pressure-induced water flow in plant roots. Plant Physiol. 55, 917–922. doi:10.1104/pp.55.5.917

Hamon, L., Richard, E., Richard, P., Boumaza, R., and Ferrier, J.-L. (2012). Rtil-system: a real-time interactive l-system for 3d interactions with virtual plants. Virtual Real. 16, 151–160. doi:10.1007/s10055-011-0193-y

Heinrich, M. K., Wahby, M., Soorati, M. D., Hofstadler, D. N., Zahadat, P., Ayres, P., et al. (2016). “Self-organized construction with continuous building material: higher flexibility based on braided structures,” in Foundations and Applications of Self* Systems, IEEE International Workshops on (Augsburg: IEEE), 154–159.

Jacob, R. J., Girouard, A., Hirshfield, L. M., Horn, M. S., Shaer, O., Solovey, E. T., et al. (2008). “Reality-based interaction: a framework for post-wimp interfaces,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Florence: ACM), 201–210.

Kniemeyer, O., Barczik, G., Hemmerling, R., and Kurth, W. (2008). “Relational growth grammars – a parallel graph transformation approach with applications in biology and architecture,” in Applications of Graph Transformations with Industrial Relevance: Third International Symposium, AGTIVE 2007 (Kassel, Germany). Revised Selected and Invited Papers, Berlin (Heidelberg: Springer-Verlag), 152–67.

Kniemeyer, O., Buck-Sorlin, G., and Kurth, W. (2006). “Groimp as a platform for functional-structural modelling of plants,” in Functional-Structural Plant Modelling in Crop Production, eds J. Vos, L. F. M. Marcelis, P. H. B. deVisser, P. C. Struik, and J. B. Evers (Berlin: Springer), 43–52.

Kniemeyer, O., Buck-Sorlin, G. H., and Kurth, W. (2004). A graph grammar approach to artificial life. Artif. Life 10, 413–431. doi:10.1162/1064546041766451

Lindenmayer, A. (1971). Developmental systems without cellular interactions, their languages and grammars. J. Theor. Biol. 30, 455–484. doi:10.1016/0022-5193(71)90002-6

Onishi, K., Hasuike, S., Kitamura, Y., and Kishino, F. (2003). “Interactive modeling of trees by using growth simulation,” in Proceedings of the ACM Symposium on Virtual Reality Software and Technology (Osaka: ACM), 66–72.

Prusinkiewicz, P., and Hanan, J. (2013). Lindenmayer Systems, Fractals, and Plants, Vol. 79. Berlin: Springer Science & Business Media.

Prusinkiewicz, P., and Lindenmayer, A. (1996). The Algorithmic Beauty of Plants. New York: Springer-Verlag.

Rankin, J. (2015). The uplift model terrain generator. Int. J. Comput. Graph. Anim. 5, 1. doi:10.5121/ijcga.2015.5201

Reynolds, C. W. (1987). Flocks, herds, and schools: a distributed behavioral model. Comput. Graph. 21, 25–34. doi:10.1145/37402.37406

Shaker, N., Togelius, J., and Nelson, M. J. (2016). Procedural Content Generation in Games. Cham, Switzerland: Springer.

Slater, M., and Wilbur, S. (1997). A framework for immersive virtual environments (five): speculations on the role of presence in virtual environments. Presence 6, 603–616. doi:10.1162/pres.1997.6.6.603

Stern, K. R., Jansky, S., and Bidlack, J. E. (2003). Introductory Plant Biology. New York: McGraw-Hill.

Tyrrell, A. M., and Trefzer, M. A. (2015). Evolution, Development and Evolvable Hardware. Berlin, Heidelberg: Springer Berlin Heidelberg, 3–25.

von Mammen, S. (2006). Swarm Grammars – A New Approach to Dynamic Growth. Technical Report, University of Calgary, Calgary, Canada.

von Mammen, S., and Edenhofer, S. (2014). “Swarm grammars GD: interactive exploration of swarm dynamics and structural development,” in ALIFE 14: The International Conference on the Synthesis and Simulation of Living Systems, eds H. Sayama, J. Rieffel, S. Risi, R. Doursat, and H. Lipson (New York, USA: ACM, MIT press), 312–320.

von Mammen, S., Hamann, H., and Heider, M. (2016a). “Robot gardens: an augmented reality prototype for plant-robot biohybrid systems,” in Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, VRST’16 (München: ACM), 139–142. doi:10.1145/2993369.2993400

von Mammen, S., Knote, A., and Edenhofer, S. (2016b). “Cyber sick but still having fun,” in Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology (München: ACM), 325–326.

von Mammen, S., and Jacob, C. (2007). “Genetic swarm grammar programming: ecological breeding like a gardener,” in CEC 2007, IEEE Congress on Evolutionary Computation, eds D. Srinivasan, and L. Wang (Singapore: IEEE Press), 851–858.

von Mammen, S., and Jacob, C. (2009). The evolution of swarm grammars: growing trees, crafting art and bottom-up design. IEEE Comput. Intell. Mag. 4, 10–19. doi:10.1109/MCI.2009.933096

von Mammen, S., Schellmoser, S., Jacob, C., and Hähner, J. (2016). “Modelling & understanding the human body with swarmscript. Wiley series in modeling and simulation,” in The Digital Patient: Advancing Medical Research, Education, and Practice, Chap. 11 (Hoboken, New Jersey: John Wiley & Sons), 149–170.

von Mammen, S., and Steghöfer, J.-P. (2014). “Bring it on, complexity! Present and future of self-organising middle-out abstraction,” in The Computer after Me: Awareness and Self-Awareness in Autonomic Systems (London, UK: Imperial College Press), 83–102.

Wagner, D., Hofmann, C., Hamann, H., and von Mammen, S. (2017). “Design and exploration of braiding swarms in VR,” in Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, VRST’17.

Wahby, M., Divband Soorati, M., von Mammen, S., and Hamann, H. (2015). “Evolution of controllers for robot-plant bio-hybrids: a simple case study using a model of plant growth and motion,” in Proceedings of 25. Workshop Computational Intelligence (Dortmund: KIT Scientific Publishing), 67–86.

Keywords: biohybrid systems, augmented reality, virtual reality, user interfaces, biological development, generative systems

Citation: von Mammen SA, Wagner D, Knote A and Taskin U (2017) Interactive Simulations of Biohybrid Systems. Front. Robot. AI 4:50. doi: 10.3389/frobt.2017.00050

Received: 14 May 2017; Accepted: 21 September 2017;

Published: 23 October 2017

Edited by:

Kasper Stoy, IT University of Copenhagen, Denmark

Reviewed by:

Sunil L. Kukreja, National University of Singapore, Singapore

Andrew Adamatzky, University of the West of England, United Kingdom

Pablo González De Prado Salas, IT University of Copenhagen, Denmark

Copyright: © 2017 von Mammen, Wagner, Knote and Taskin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sebastian Albrecht von Mammen, sebastian.von.mammen@uni-wuerzburg.de
